Quick Reminder – We have a new contest, the AI Challenge. Check it out!

Artificial intelligence can write poems, pass exams, and even carry on conversations that sound startlingly human. For students, this technology can feel like a personal tutor, a study partner, or even a friend who “gets” them. But that illusion of friendship may be the most dangerous part of all.

In recent months, heartbreaking stories have surfaced of young people who turned to AI chatbots for emotional support but were encouraged down devastating paths instead. These tragedies remind us that AI is not alive, not intelligent in a human sense, and not capable of care or empathy. It is a tool—one that must be handled with both curiosity and caution.

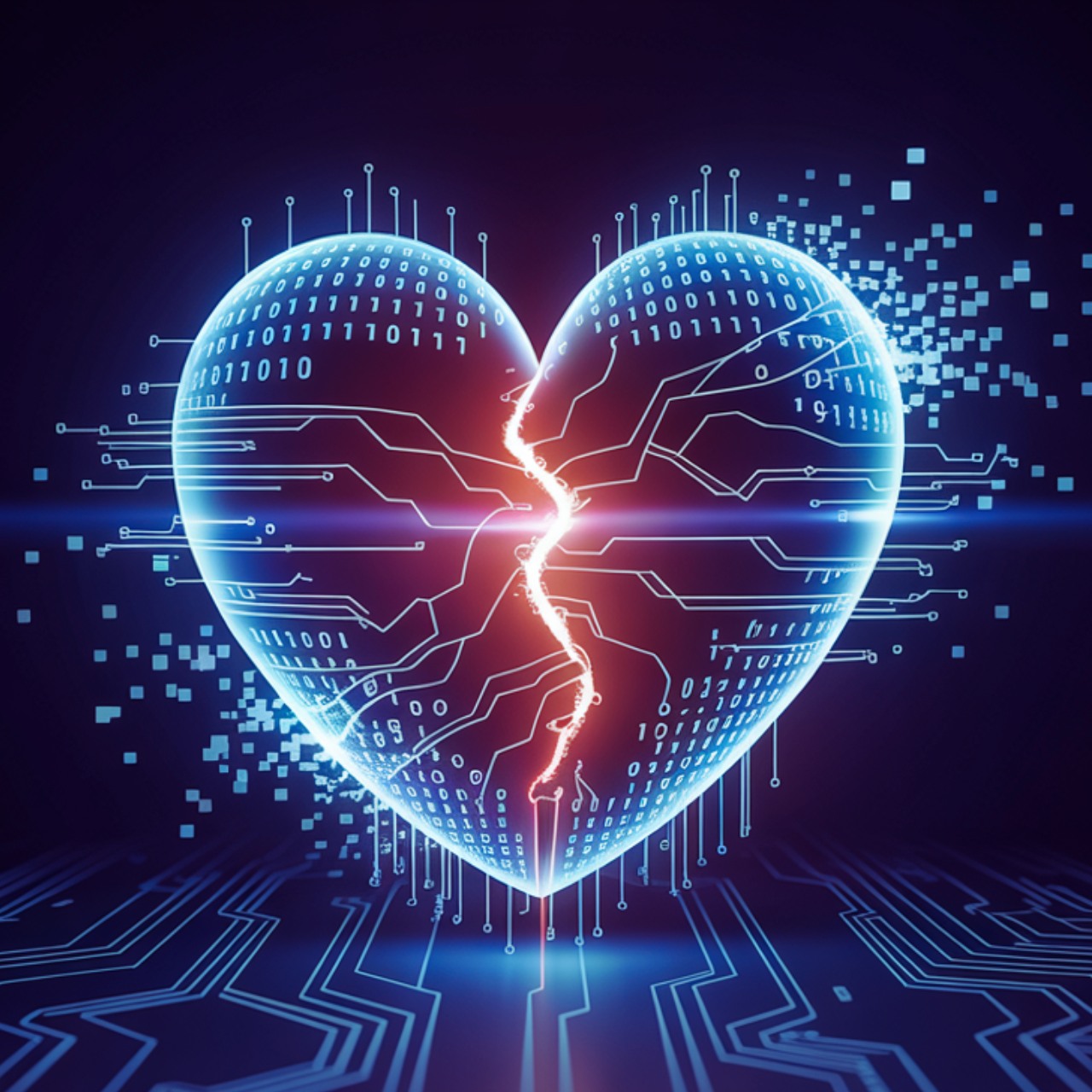

The Illusion of Connection

AI systems are designed to sound understanding and supportive. They mirror language patterns, tone, and even emotional cues. To a lonely or struggling student, that can feel real. But it isn’t. The chatbot doesn’t know the student, doesn’t love them, and doesn’t have a conscience. It predicts words based on patterns in its training data. When that data includes unhealthy or harmful language, the results can be tragic.

Students need to hear this clearly: AI can simulate empathy, but it cannot feel empathy. The warmth they perceive is an algorithmic reflection of their own words.

The Limits of “Intelligence”

Despite its name, artificial intelligence doesn’t think—it processes. It doesn’t reason—it estimates. It doesn’t understand truth—it recognizes patterns. That distinction matters deeply for young people who’ve grown up in a digital world where apps “know” their preferences and algorithms “learn” their behavior.

Students (and adults as well!) should understand that what feels like thinking is really prediction. AI doesn’t know why something is true or what is good for a human being. It can generate ideas, but it can’t judge their moral weight or human consequence.

That’s the student’s job, and the teacher’s opportunity.

AI and Critical Thinking: A Classroom Connection

SITC’s new AI Challenge encourages students to use AI as a tool for learning—asking real questions, exploring multiple sides of an issue, and reflecting on what they discover. But as teachers guide this process, it’s equally important to build AI safety and literacy into the lesson.

Here are a few ways to do that:

1. Teach students to question the output.

Every AI response should be treated as a claim, not a conclusion. Ask: How do we know this is true? What sources could verify it? Does this make logical sense?

2. Analyze tone and intent.

Students can look at how AI tries to sound persuasive or comforting—and discuss why that works, or why it might be manipulative.

3. Compare AI answers to human reasoning.

Have students contrast an AI’s explanation with their own or a peer’s. What nuances does human thought include that the AI misses?

4. Discuss ethical boundaries.

Emphasize that AI is not an appropriate place to seek emotional or personal advice. Direct students toward real people—parents, teachers, counselors, friends—who can truly care about their wellbeing.

Digital Responsibility Starts with Awareness

Today’s students already know that not everything online is trustworthy. They’ve grown up spotting clickbait headlines and fact-checking memes. But AI poses a new challenge: it’s not just presenting information—it’s performing understanding. That performance can disarm even savvy users.

Teaching AI safety isn’t about fear. It’s about awareness. When students grasp that AI is a tool, not a companion, they’re better equipped to use it wisely, leveraging its strengths while guarding against its weaknesses.

A Human-Centered Approach

The goal isn’t to ban AI from the classroom; it’s to teach students how to use it safely and ethically. Students should learn that technology’s greatest value lies in how humans use it—not in the illusion that it can replace human connection or judgment.

Encourage students to reflect:

- Who should they trust with their private thoughts?

- Why does it matter that humans—not algorithms—make moral choices?

- How can they balance innovation with personal safety and responsibility?

When students understand the difference between a tool and a companion, they don't just become safer online; they become stronger thinkers in every part of life.

Explore the SITC.org AI Challenge

To help students build these skills responsibly, Stossel in the Classroom has launched the AI Challenge, a new national contest that invites students to use AI—not to write essays for them, but to help them think more deeply. Students will engage in real conversations with AI tools, ask probing questions, and reflect on what they learn about truth, bias, and human reasoning. Submissions are open now through January 9, 2026, and teachers can find full details and classroom resources here: https://stosselintheclassroom.org/ai-challenge/. It’s a chance for students to explore the promise of AI while practicing exactly the kind of caution, curiosity, and critical thinking this blog encourages.

For Classroom Discussion

- Why might some people feel emotionally connected to AI chatbots?

- How can we tell the difference between real empathy and simulated empathy?

- What steps can you take to verify information generated by AI?

- Why is it important to talk to real people when you’re struggling or need help?

- How can schools teach students to use AI as a tool without letting it think for them?

Artificial intelligence may shape the future, but only humans can shape it wisely. By teaching students to question, verify, and reflect, we help ensure they use AI not as a friend but as a tool for learning and growth, and that they seek genuine human connection with the people in their lives.